Anti Revenge Color

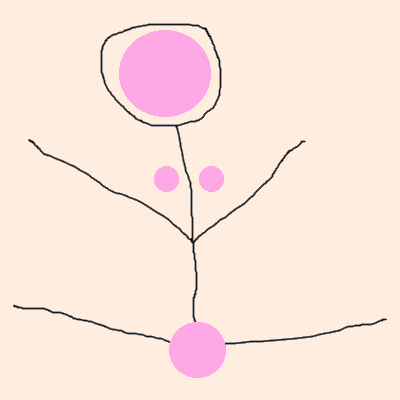

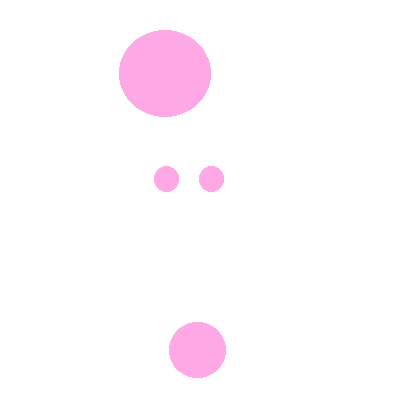

Presumably to protect people from having their dignity trashed online by the non-consensual public release of their nude photos, a social media company has proposed that people upload their nude photos to the company themselves, have some employees look at them, and keep only the photos' hashes on the company's servers. The proposal is ill thought out and extremely dangerous, both for the potential victims and for the employees, who may be exposed to a constant stream of such photos. Below is a proper implementation of a protection mechanism for sensitive photos.

As a side note for those wondering: it would not work for people to create the hashes on their own computers, because then any photo in the world could be censored, since nobody could check beforehand that the photos meet certain criteria.

Since the upload is voluntary, the people who upload the photos, either those photographed or their guardians, can edit them before uploading them to the protection service.

First, there should be a standard color to use for editing. Let's say that this color is RGB 255-168-230 (hex FFA8E6); let's call it the "Anti Revenge Color", or ARC. Open the photo in a basic image editor, like Paint. Draw a shape (such as an oval, circle, or square), filled with the ARC color, over the faces and other sensitive areas in the photo. Save the edited photo.
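For readers who would rather script the edit than use an image editor, the covering step can be sketched in a few lines. This is only an illustration: it assumes the photo is already decoded into rows of `(r, g, b)` tuples, and the helper name `cover_rectangle` is made up for this example.

```python
# The color proposed in the text: RGB 255-168-230, hex FFA8E6.
ARC = (255, 168, 230)

def cover_rectangle(pixels, left, top, right, bottom, color=ARC):
    """Return a copy of `pixels` with the box [left, right) x [top, bottom) filled.

    `pixels` is a list of rows, each row a list of (r, g, b) tuples.
    Coordinates outside the image are clipped.
    """
    covered = [list(row) for row in pixels]
    for y in range(max(top, 0), min(bottom, len(covered))):
        for x in range(max(left, 0), min(right, len(covered[y]))):
            covered[y][x] = color
    return covered

# A dummy 4x3 photo with a 2x2 sensitive area starting at column 1, row 0.
photo = [[(10, 20, 30)] * 4 for _ in range(3)]
edited = cover_rectangle(photo, 1, 0, 3, 2)
```

The original list is left untouched, so the unedited photo never has to leave the user's computer.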

Upload the edited photo to the protection service. If the received photo doesn't contain a sufficiently large area of pixels with the ARC color, the user may have forgotten to edit the photo, so the service must reject the upload with a message asking the user to edit the image accordingly. An employee can then look at the received photo and decide whether it's covered by the protection terms. The service can hash the received photo and keep the hash. Later, the service will have to recreate the received photo from the original public photo in order to match their hashes. To do this, it has to extract from the received photo, and keep, a mask image containing only the pixels that have the ARC color.
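The service-side checks on upload could look roughly like the sketch below. Several things here are assumptions made for illustration, not part of the proposal: the 5% threshold (`MIN_ARC_FRACTION`), the use of SHA-256 over raw pixel values as "the hash", the representation of the mask as a grid of booleans, and all function names.

```python
import hashlib

ARC = (255, 168, 230)      # hex FFA8E6
MIN_ARC_FRACTION = 0.05    # hypothetical threshold; the text only says "large"

def arc_fraction(pixels):
    """Fraction of pixels that are exactly the ARC color."""
    total = sum(len(row) for row in pixels)
    arc = sum(1 for row in pixels for p in row if p == ARC)
    return arc / total if total else 0.0

def extract_mask(pixels):
    """Mask image as a grid of booleans: True where the pixel is ARC."""
    return [[p == ARC for p in row] for row in pixels]

def hash_pixels(pixels):
    """SHA-256 over the image size and raw RGB values (an assumed hash choice)."""
    h = hashlib.sha256()
    width = len(pixels[0]) if pixels else 0
    h.update(f"{width}x{len(pixels)}:".encode())
    for row in pixels:
        for r, g, b in row:
            h.update(bytes((r, g, b)))
    return h.hexdigest()

def accept_upload(pixels):
    """Reject unedited photos; otherwise return (hash, mask) to store."""
    if arc_fraction(pixels) < MIN_ARC_FRACTION:
        raise ValueError(
            "Please cover the sensitive areas with the ARC color before uploading."
        )
    return hash_pixels(pixels), extract_mask(pixels)
```

Only the returned hash and mask need to be kept. The mask records where the ARC pixels were, not what was underneath them.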

Since the received photo has the sensitive areas covered, the service may also store it in order to later perform advanced image comparison, not just hash comparison. This would allow the service to detect photos that were edited before being made public. However, this step should be implemented only at a later stage, perhaps as an option for those who choose it.

The service can now delete the received photo. When the service goes through the public photos, trying to match their hashes to the stored hashes, it has to place each mask image over each public photo, hash the resulting photo, and search for that hash in its database of hashes. This process is much more resource intensive than simply hashing the photos without a mask: since the service doesn't know which mask matches which public photo, it has to repeat the process for every mask image that it stores. On the other hand, the service doesn't have to find the sensitive areas to blur.

This process should be much easier for both users and employees to accept, and doesn't risk exposing sensitive details, either accidentally or intentionally, to people who shouldn't see them.
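The matching loop described above can be sketched as follows. As before, this is an illustration under stated assumptions: images are rows of `(r, g, b)` tuples, masks are grids of booleans, the hash is SHA-256 over raw pixel values, and names such as `is_protected` are hypothetical. Skipping masks whose dimensions differ from the public photo is an optimization added here, not something the text specifies.

```python
import hashlib

ARC = (255, 168, 230)  # hex FFA8E6

def hash_pixels(pixels):
    """SHA-256 over the image size and raw RGB values (an assumed hash choice)."""
    h = hashlib.sha256()
    width = len(pixels[0]) if pixels else 0
    h.update(f"{width}x{len(pixels)}:".encode())
    for row in pixels:
        for r, g, b in row:
            h.update(bytes((r, g, b)))
    return h.hexdigest()

def same_size(pixels, mask):
    """True if the mask has exactly the same dimensions as the photo."""
    return len(pixels) == len(mask) and all(
        len(prow) == len(mrow) for prow, mrow in zip(pixels, mask)
    )

def apply_mask(pixels, mask):
    """Paint ARC over every pixel where the mask is True."""
    return [
        [ARC if m else p for p, m in zip(prow, mrow)]
        for prow, mrow in zip(pixels, mask)
    ]

def is_protected(public_pixels, stored_masks, stored_hashes):
    """Try every stored mask on the public photo and look up the resulting hash."""
    for mask in stored_masks:
        if not same_size(public_pixels, mask):
            continue  # this mask cannot belong to a photo of a different size
        if hash_pixels(apply_mask(public_pixels, mask)) in stored_hashes:
            return True
    return False

# Usage: a 4x3 public photo whose masked version was registered earlier.
original = [[(x, y, 0) for x in range(4)] for y in range(3)]
mask = [[False] * 4 for _ in range(3)]
mask[1][1] = mask[1][2] = True
stored_hashes = {hash_pixels(apply_mask(original, mask))}
found = is_protected(original, [mask], stored_hashes)
```

The cost is one hash per public photo per stored mask of matching size, which is the extra work the text refers to. Note that an exact hash like the one assumed here only matches copies that are pixel-for-pixel identical outside the masked areas.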
